08:50

Opening Remarks (08:50 AM - 09:00 AM)

09:00

Invited Talk 1 (09:00 AM - 09:35 AM)

Andrea Vedaldi is Professor of Computer Vision and Machine Learning at the University of Oxford, where he has co-led the Visual Geometry Group since 2012. His current work focuses on generative AI for 3D computer vision, both for generating 3D objects from text and images and as a basis for image understanding. He is the author of more than 200 peer-reviewed publications in the top machine vision and artificial intelligence conferences and journals. He is a recipient of the Mark Everingham Prize for selfless contributions to the computer vision community, the ACM Test of Time Award for his open-source software contributions, and the best paper award from the Conference on Computer Vision and Pattern Recognition (CVPR).

09:35

Invited Talk 2 (09:35 AM - 10:10 AM)

Katerina Fragkiadaki is an Assistant Professor in the Machine Learning Department at Carnegie Mellon University. Her research interests lie in building machines that can understand the stories portrayed in videos and, conversely, in using videos to teach machines about the world. The penultimate goal of her work is to build a machine that comprehends movie plots; the ultimate goal is a machine that would rather watch the films of Ingmar Bergman than anything else.

Before joining the faculty of Carnegie Mellon's Machine Learning Department, Professor Fragkiadaki spent three years as a postdoctoral researcher, first at UC Berkeley working with Jitendra Malik, and then at Google Research in Mountain View, where she worked with the video group. She completed her Ph.D. in the GRASP (General Robotics, Automation, Sensing & Perception) program at the University of Pennsylvania under the guidance of Jianbo Shi. She did her undergraduate studies at the National Technical University of Athens; before that, she was in Crete.

Professor Fragkiadaki's academic path and research interests center on machines that can comprehend and interpret the narratives conveyed through video, with the ultimate aim of AI systems that can appreciate and engage with complex artistic works such as the films of acclaimed director Ingmar Bergman.

10:10

Coffee & Poster session (10:10 AM - 10:40 AM)

10:45

Invited Talk 3 (10:45 AM - 11:20 AM)

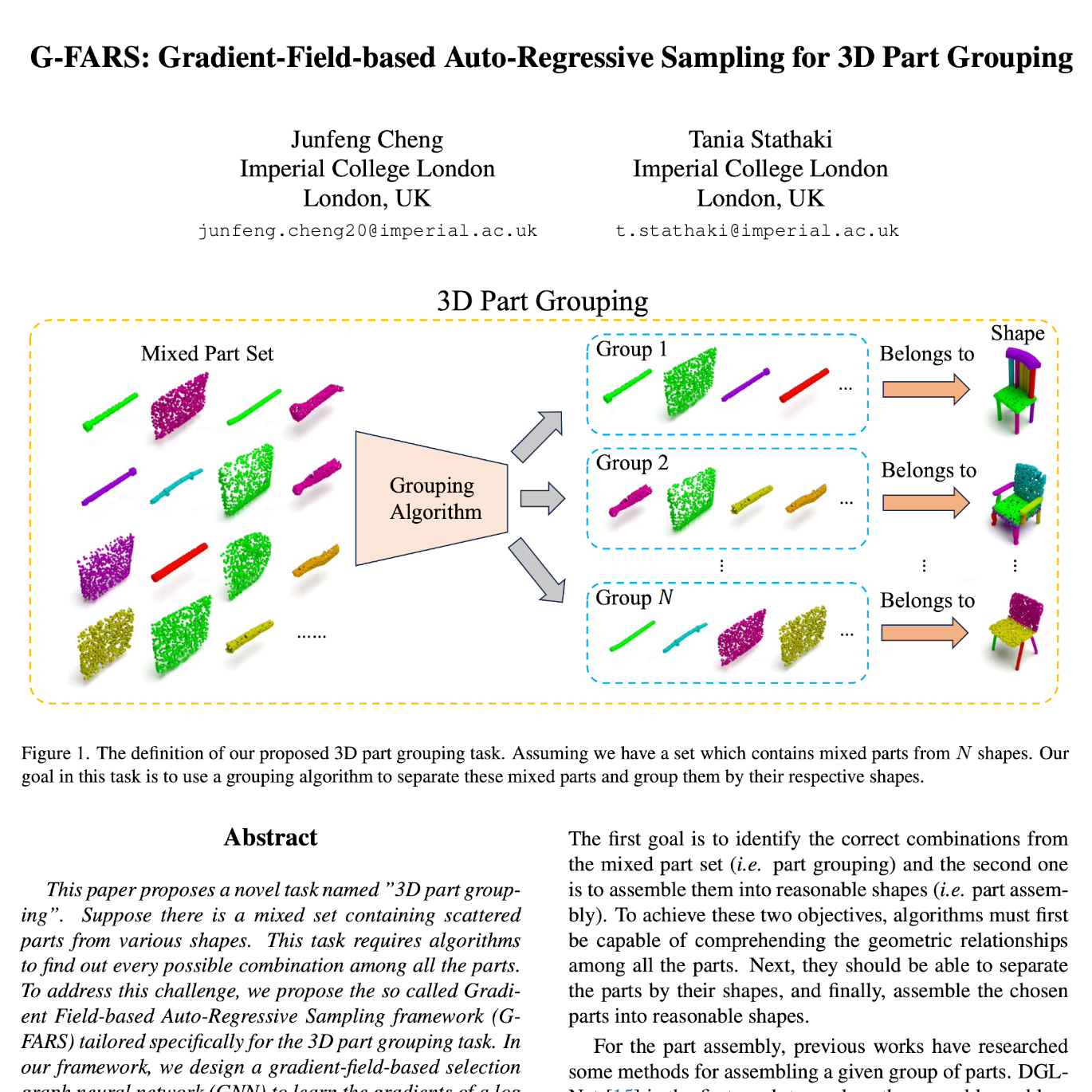

Minhyuk Sung is an assistant professor in the School of Computing at KAIST, affiliated with the Graduate School of AI and the Graduate School of Metaverse. Before joining KAIST, he was a Research Scientist at Adobe Research. He received his Ph.D. from Stanford University under the supervision of Professor Leonidas J. Guibas. His research interests lie in vision, graphics, and machine learning, with a focus on 3D geometric data generation, processing, and analysis. His academic service includes serving on the program committees of SIGGRAPH Asia 2022, 2023, and 2024, Eurographics 2022 and 2024, Pacific Graphics 2023, and AAAI 2023 and 2024.

11:20

Invited Talk 4 (11:20 AM - 11:55 AM)

Jiajun Wu is an Assistant Professor of Computer Science and, by courtesy, of Psychology at Stanford University, working on computer vision, machine learning, and computational cognitive science. Before joining Stanford, he was a Visiting Faculty Researcher at Google Research. He received his PhD in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology. Wu's research has been recognized through Young Investigator Program (YIP) awards from ONR and AFOSR, the NSF CAREER award, paper awards and award finalist selections at ICCV, CVPR, SIGGRAPH Asia, CoRL, and IROS, dissertation awards from ACM, AAAI, and MIT, the 2020 Samsung AI Researcher of the Year award, and faculty research awards from J.P. Morgan, Samsung, Amazon, and Meta.

12:00

Lunch break (12:00 PM - 01:00 PM)

01:00

Invited Talk 5 (01:00 PM - 01:35 PM)

Srinath Sridhar is an assistant professor of computer science at Brown University. He received his PhD at the Max Planck Institute for Informatics and was subsequently a postdoctoral researcher at Stanford. His research interests are in 3D computer vision and machine learning. Specifically, his group (https://ivl.cs.brown.edu) focuses on visual understanding of 3D human physical interactions, with applications ranging from robotics to mixed reality. He is a recipient of the NSF CAREER award and a Google Research Scholar award, and his work received a Eurographics Best Paper Honorable Mention. He spends part of his time as a visiting academic at Amazon Robotics and has previously spent time at Microsoft Research Redmond and the Honda Research Institute.

01:35

Oral paper presentations (01:35 PM - 03:00 PM)

03:00

Challenges (03:00 PM - 03:15 PM)

A presentation of the 3DCoMPaT++ challenge winners and their solutions, and of the VSIC challenge.

03:15

Coffee & Poster session (03:15 PM - 04:00 PM)

04:00

Invited Talk 6 (04:00 PM - 04:35 PM)

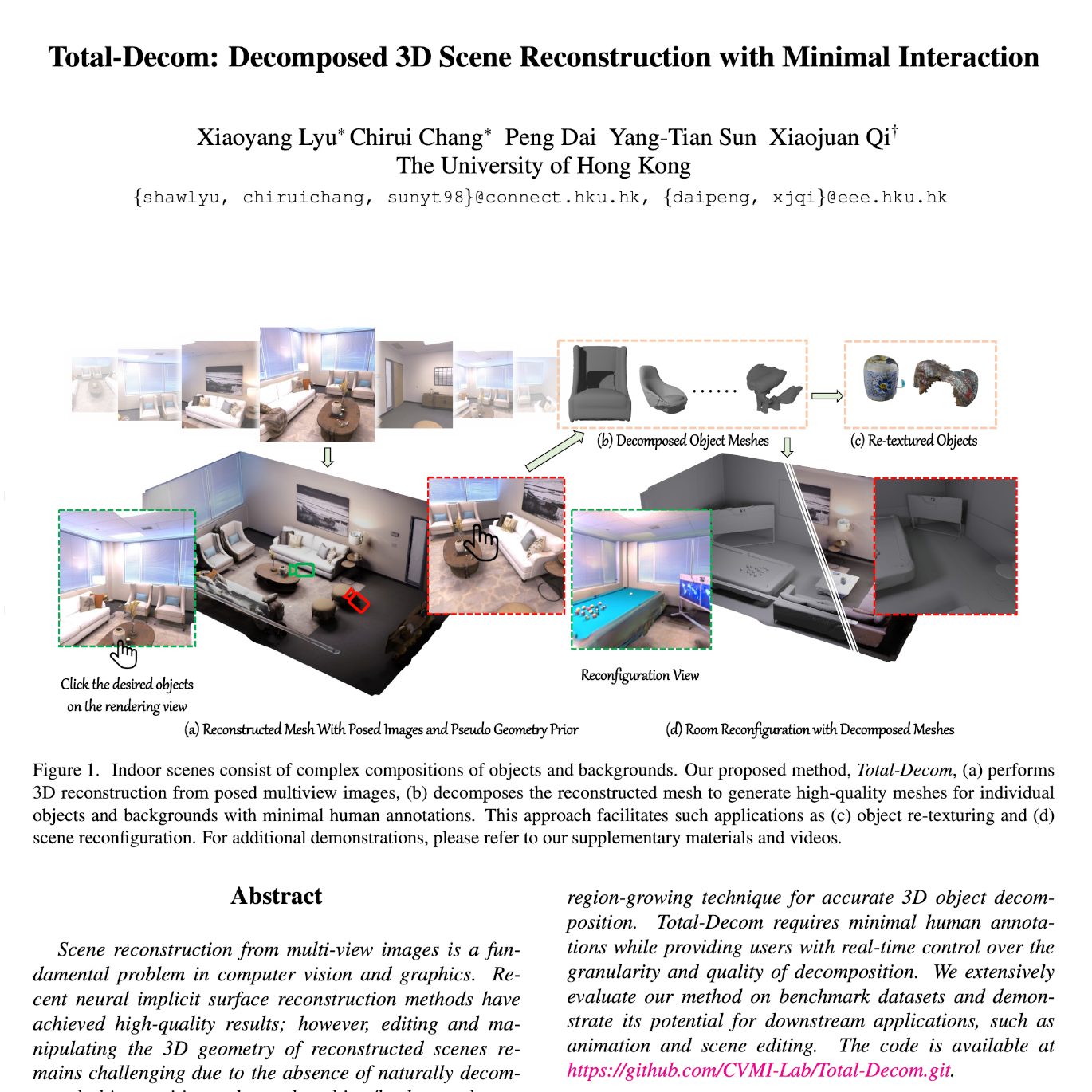

Qi Xiaojuan is an assistant professor in the Department of Electrical and Electronic Engineering at the University of Hong Kong. She received her Ph.D. from the Chinese University of Hong Kong and has worked at or visited the University of Toronto, Oxford University, and the Intel Visual Computing Group. Her work aims to give machines the ability to perceive, understand, and reconstruct the visual world in open-world settings, and to advance their deployment in embodied agents.

04:35

Invited Talk 7 (04:35 PM - 05:10 PM)

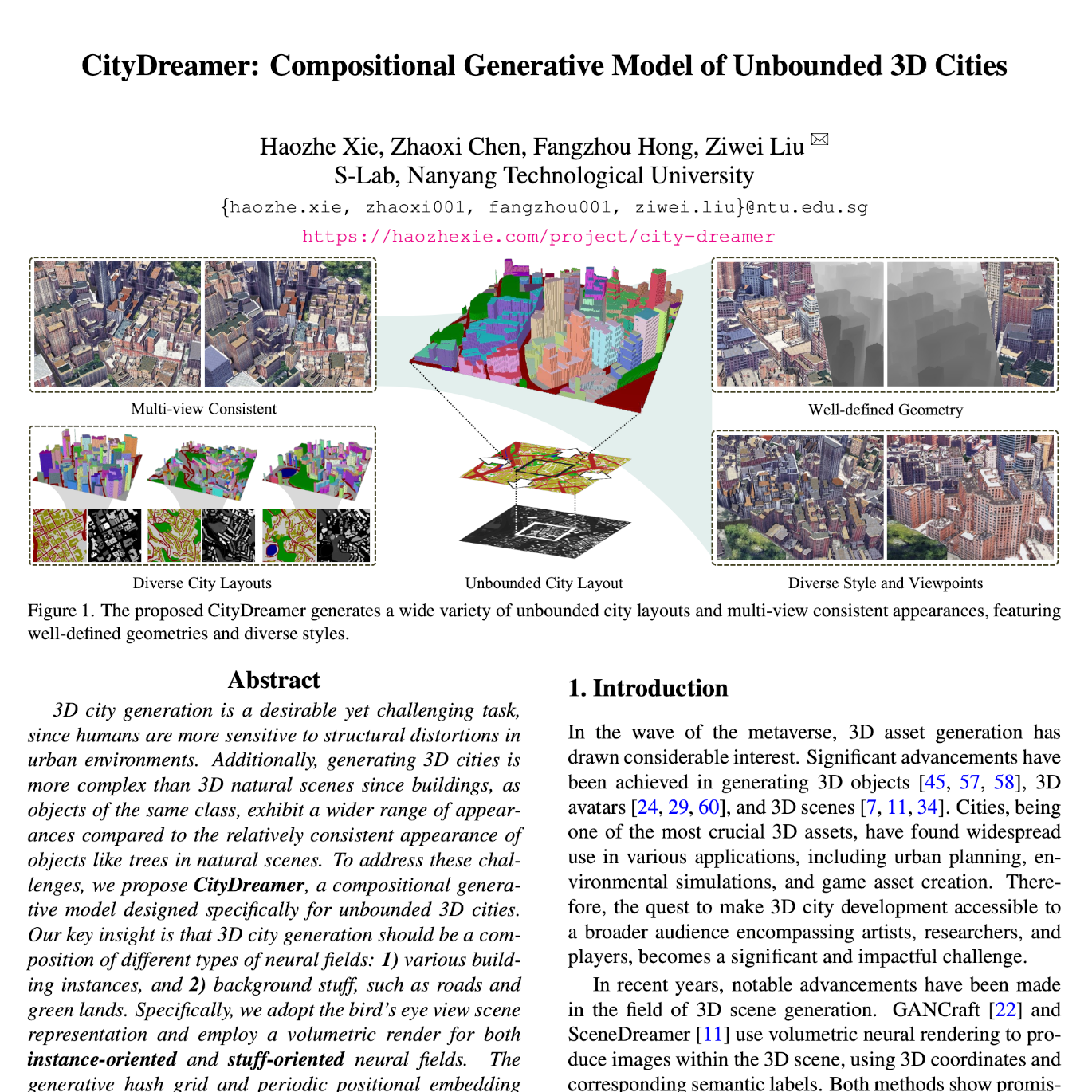

Prof. Dai's research focuses on attaining a 3D understanding of the world around us: capturing and constructing semantically informed 3D models of real-world environments. This includes 3D reconstruction and semantic understanding from commodity RGB-D sensor data, as well as leveraging generative 3D deep learning to enable understanding of, and interaction with, 3D scenes for content creation and for virtual or robotic agents.

Prof. Dai received her PhD in computer science from Stanford in 2018 and her BSE in computer science from Princeton in 2013. Since 2020, she has been a professor at TUM, where she leads the 3D AI Lab. Her research has been recognized with a ZDB Junior Research Group Award, an ACM SIGGRAPH Outstanding Doctoral Dissertation Honorable Mention, and a Stanford Graduate Fellowship.

05:15

Panel discussion (05:15 PM - 06:00 PM)

A panel discussion on the future of compositional 3D vision with the invited speakers and other experts in the field.

Zoom Live Stream Link