2D-3D Dataloader: Matching modalities

We show here how to easily combine the HDF5 pointcloud loader with the WebDataset 2D dataloader we provide.

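# Demo helper modules
from demo_utils_3D import *
from demo_utils_2D import *

import numpy as np
import utils3D.plot as plt_utils_3D

# Root folders of the 3D pointcloud data and of the 2D render data
ROOT_DIR_3D = "./3D_PC_data/"
ROOT_DIR_2D = "./2D_data/"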

Fetching 2D renders and 3D pointclouds in the same dataloader

To jointly iterate over pointclouds and 2D renders, we provide the FullLoader2D_3D example class, which fetches 2D renders from the data stream and the matching 3D pointclouds from a provided compatible HDF5 dataloader.

Note

The HDF5 files we provide on our Download page support loading at most 10 compositions. If you want to use more 2D-3D compositions, you will need to extract your own HDF5 file for your use case.
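To make the matching idea concrete, here is a minimal, library-independent sketch: each 2D sample carries identifiers, and the matching pointcloud is looked up by key. It assumes the lookup key is the (shape_id, style_id) pair, and `match_2d_3d`, `store_3d` and the toy records are hypothetical names used only for illustration. This is not the actual FullLoader2D_3D implementation.

```python
from typing import Any, Dict, Iterable, Iterator, Tuple

Key = Tuple[str, str]  # assumed lookup key: (shape_id, style_id)


def match_2d_3d(
    stream_2d: Iterable[Dict[str, Any]],
    store_3d: Dict[Key, Any],
) -> Iterator[Tuple[Dict[str, Any], Any]]:
    """Yield (2D sample, 3D pointcloud) pairs that share the same key.

    2D samples whose composition is missing from the 3D store are skipped,
    which is what one would expect when the 3D file holds fewer
    compositions than the 2D shards.
    """
    for sample in stream_2d:
        key = (sample["shape_id"], sample["style_id"])
        if key in store_3d:
            yield sample, store_3d[key]


# Toy data: two renders of composition "0", one render of composition "1".
renders = [
    {"shape_id": "chair_01", "style_id": "0", "view_id": 0},
    {"shape_id": "chair_01", "style_id": "0", "view_id": 1},
    {"shape_id": "chair_01", "style_id": "1", "view_id": 0},
]
# The toy 3D store only contains composition "0" for this shape.
pointclouds = {("chair_01", "0"): "pointcloud for chair_01 / style 0"}

pairs = list(match_2d_3d(renders, pointclouds))
print(len(pairs))
```

In this toy run, the render of composition "1" is dropped because no matching pointcloud exists, which illustrates why the number of compositions stored in the HDF5 file bounds what a joint loader can return.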

"""

Dataloader for the full data available in the WDS shards.
Adapt and filter to the fields needed for your usage.

Args:
----
    ...: See FullLoader
    loader_3D: The 3D loader to fetch the 3D data from.
"""
from compat2D_3D import FullLoader2D_3D

valid_loader_2D_3D = (
    FullLoader2D_3D(root_url_2D=ROOT_DIR_2D,
                    root_dir_3D=ROOT_DIR_3D,
                    num_points=2048,
                    split="valid",
                    semantic_level="fine",
                    n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

We can now simultaneously iterate over 2D renders and 3D pointclouds, accessing all relevant modalities from a unified dataloader:

# Unpacking the huge sample
for data_tuple in valid_loader_2D_3D:
    shape_id, image, target, \
    part_mask, mat_mask, depth, \
    style_id, view_id, view_type, \
    cam_parameters, \
    points, points_part_labels, points_mat_labels = data_tuple
    break
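Unpacking thirteen positional fields is easy to get wrong when the order changes. One possible convenience, sketched below, is to wrap each sample in a named tuple so fields can be accessed by name. `Sample2D3D` is a hypothetical helper, not part of the provided loaders, and its field order simply mirrors the unpacking shown above.

```python
from typing import Any, NamedTuple


class Sample2D3D(NamedTuple):
    """Named view of one sample; field order follows the unpacking above."""
    shape_id: Any
    image: Any
    target: Any
    part_mask: Any
    mat_mask: Any
    depth: Any
    style_id: Any
    view_id: Any
    view_type: Any
    cam_parameters: Any
    points: Any
    points_part_labels: Any
    points_mat_labels: Any


# Stand-in for one item yielded by the loader: 13 placeholder values.
data_tuple = tuple(f"field_{i}" for i in range(13))

# Raises TypeError if the number of fields does not match.
sample = Sample2D3D(*data_tuple)
print(sample.points)
print(sample.points_mat_labels)
```

With a real loader, `Sample2D3D(*data_tuple)` would be called inside the iteration loop in place of the long tuple assignment.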

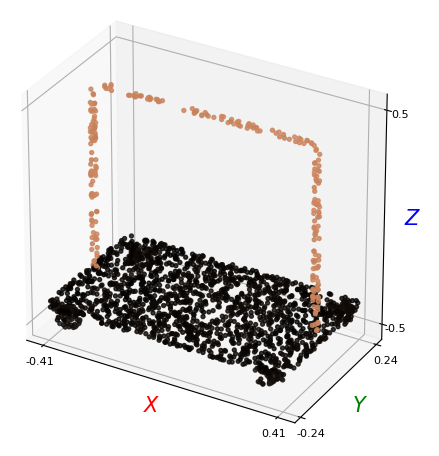

# Plotting the 3D pointcloud
points_xyz = points[:, :3]
points_col = points[:, 3:]

plt_utils_3D.plot_pointclouds([np.array(points_xyz)],
                              colors=[np.array(points_col)],
                              size=5,
                              point_size=8)
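The slicing above suggests that each point is stored as six values, three coordinates followed by three color channels. Under that assumption, the sketch below shows a small hypothetical helper, `split_points`, that performs the same split and also tolerates a leading batch dimension, which may be present depending on how the loader batches samples.

```python
import numpy as np


def split_points(points):
    """Split an (..., 6) array into xyz coordinates and color channels."""
    points = np.asarray(points)
    if points.shape[-1] != 6:
        raise ValueError(
            f"expected 6 channels per point, got {points.shape[-1]}"
        )
    # Ellipsis indexing works with or without a leading batch dimension.
    return points[..., :3], points[..., 3:]


# Toy pointcloud: 4 points, columns are x, y, z followed by 3 color values.
toy_points = np.arange(24, dtype=np.float32).reshape(4, 6)
xyz, rgb = split_points(toy_points)
print(xyz.shape, rgb.shape)

# The same call on a batch containing a single pointcloud.
xyz_b, rgb_b = split_points(toy_points[None])
print(xyz_b.shape, rgb_b.shape)
```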

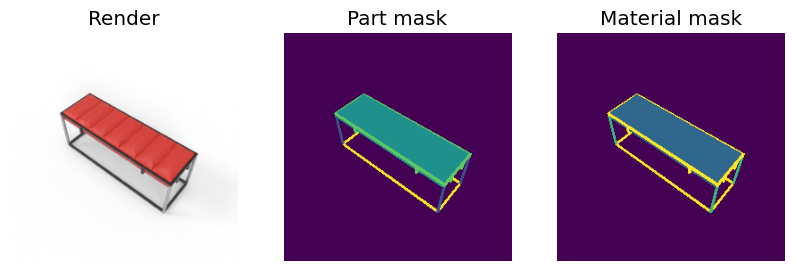

# Plotting the 2D render with its part and material segmentation masks
part_mask = mask_to_colors(part_mask)
mat_mask = mask_to_colors(mat_mask)

show_tensors([image, part_mask, mat_mask], size=10,
             labels=["Render", "Part mask", "Material mask"])
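`mask_to_colors` comes from the demo utilities and its implementation is not shown here. As a rough illustration of what such a helper typically does, the sketch below maps integer labels to RGB colors through a palette lookup. `colorize_mask` and the palette values are hypothetical and may differ from what the demo utilities actually do.

```python
import numpy as np


def colorize_mask(mask, palette):
    """Map an (H, W) integer label mask to an (H, W, 3) uint8 RGB image."""
    mask = np.asarray(mask)
    palette = np.asarray(palette, dtype=np.uint8)
    if mask.min() < 0 or mask.max() >= len(palette):
        raise ValueError("mask contains a label outside the palette")
    # Integer-array indexing replaces every label with its palette row.
    return palette[mask]


# Hypothetical palette: label 0 is black, label 1 is red, label 2 is green.
palette = [(0, 0, 0), (255, 0, 0), (0, 255, 0)]
mask = np.array([[0, 1],
                 [2, 1]])

colored = colorize_mask(mask, palette)
print(colored.shape)
```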

Evaluation loader for 2D/3D

Hint

The section below is no longer relevant: our CVPR 2023 challenge is over and we have released the test set labels.

You can access the test data loader just as any other split, using the split="test" parameter.

For more information, please take a look at the documentation on the 2D dataloaders.

To account for the fact that the 2D and 3D test data is stripped of any ground truth in our release, we provide a special dataloader combining EvalLoader for 2D with EvalLoader_PC.

This loader will provide:

a) the rendered view
b) the sampled pointcloud
c) the view identifiers (view_id, shape_id, style_id)
d) the associated depth map
e) the camera view parameters

To iterate over the test data, you need this special dataloader, EvalLoader2D_3D, which only fetches these fields from the data shards.

We illustrate below its usage:

"""

Dataloader for the full data available in the WDS shards.
Adapt and filter to the fields needed for your usage.

Args:
----
    ...: See FullLoader
    loader_3D: The 3D loader to fetch the 3D data from.
"""
from compat2D_3D import EvalLoader2D_3D

aws_url = "https://3dcompat-dataset.s3-us-west-1.amazonaws.com/v2/2D/"

eval_loader_2D_3D = (
    EvalLoader2D_3D(root_url_2D=aws_url,
                    root_dir_3D=ROOT_DIR_3D,
                    num_points=2048,
                    semantic_level="coarse",
                    n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Unpacking the huge sample
for data_tuple in eval_loader_2D_3D:
    shape_id, image, \
    depth, \
    style_id, view_id, view_type, \
    cam_parameters, \
    points = data_tuple
    break
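Evaluation samples carry eight fields and no ground-truth labels. As a small sketch of one way to handle them, the hypothetical helper `to_named_sample` below pairs each element with its field name and fails loudly if the tuple length is unexpected. The field order is taken from the unpacking above; the helper itself is an assumption and not part of the provided loaders.

```python
EVAL_FIELDS = (
    "shape_id", "image", "depth",
    "style_id", "view_id", "view_type",
    "cam_parameters", "points",
)


def to_named_sample(data_tuple):
    """Pair each element of an evaluation sample with its field name."""
    if len(data_tuple) != len(EVAL_FIELDS):
        raise ValueError(
            f"expected {len(EVAL_FIELDS)} fields, got {len(data_tuple)}"
        )
    return dict(zip(EVAL_FIELDS, data_tuple))


# Stand-in for one item yielded by the evaluation loader.
fake_tuple = tuple(f"value_{i}" for i in range(len(EVAL_FIELDS)))
sample = to_named_sample(fake_tuple)
print(sample["depth"])
print("part_mask" in sample)
```

The length check is useful here because the evaluation tuple is shorter than the training one, so code written for one loader will not silently misread the other.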

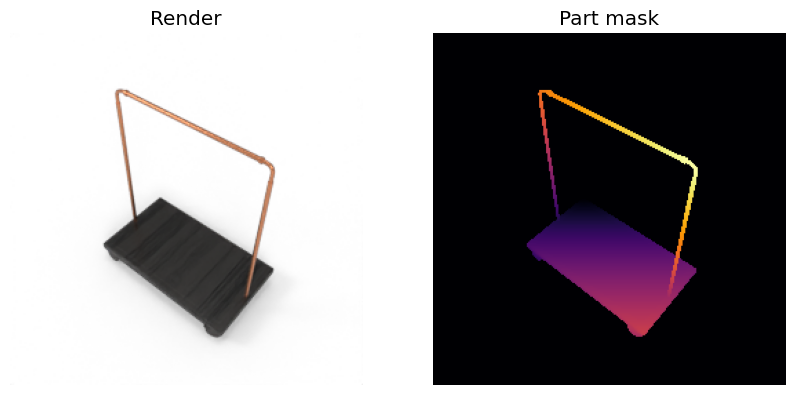

# Plotting the 2D render and its depth map
depth_map = depth_to_colors(depth)
show_tensors([image, depth_map], size=10, labels=["Render", "Depth map"])
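`depth_to_colors` is another demo utility whose implementation is not shown here. One simple way to make a depth map displayable, sketched below under that caveat, is min-max normalization to an 8-bit grayscale image. `depth_to_gray` is a hypothetical stand-in and the actual helper may use a different scaling or a colormap.

```python
import numpy as np


def depth_to_gray(depth):
    """Min-max normalize an (H, W) depth map to a uint8 grayscale image."""
    depth = np.asarray(depth, dtype=np.float64)
    lo, hi = depth.min(), depth.max()
    if hi == lo:
        # Constant depth: avoid dividing by zero and return a black image.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - lo) / (hi - lo)
    return np.round(scaled * 255).astype(np.uint8)


toy_depth = np.array([[1.0, 2.0],
                      [3.0, 5.0]])
gray = depth_to_gray(toy_depth)
print(gray)
```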

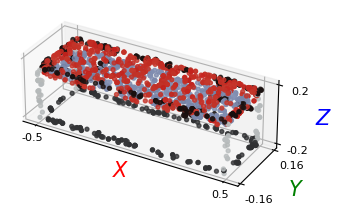

# Plotting the 3D pointcloud
points_xyz = points[:, :3]
points_col = points[:, 3:]

plt_utils_3D.plot_pointclouds([np.array(points_xyz)],
                              colors=[np.array(points_col)],
                              size=11,
                              point_size=8)