2D Dataloader#

Here are a few pointers on how to load and manipulate the 2D rendered data from the 3DCoMPaT dataset.

Hint

To properly run this notebook, you will have to download our 2D data using the provided download script. For more information, please check our Download documentation page.

ROOT_URL = './2D_data/'

from demo_utils_2D import *

from compat2D import *

Shape classification#

For the 2D shape classification task, we instantiate a simple ShapeLoader which only loads 2D rendered views.

The ShapeLoader is parameterized by the datasplit to be loaded, and by the number of compositions to use per shape.

We can instantiate a dataloader for all view types using the demo data:

"""

Shape classification dataloader.

Args:
----
    root_url:        Base dataset URL containing data split shards
    split:           One of {train, valid, test}
    semantic_level:  Semantic level. One of {fine, coarse}
    n_compositions:  Number of compositions to use
    cache_dir:       Cache directory to use
    view_type:       Filter by view type [0: canonical views, 1: random views]
    transform:       Transform to be applied on rendered views

"""

# Making a basic 2D dataloader
shape_loader = (
    ShapeLoader(root_url=ROOT_URL,
                split="valid",
                semantic_level="coarse",
                n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the dataloader and storing the first 8 images
my_tensors = []
for k, (image, target) in enumerate(shape_loader, start=1):
    my_tensors += [image]
    if k == 8:
        break

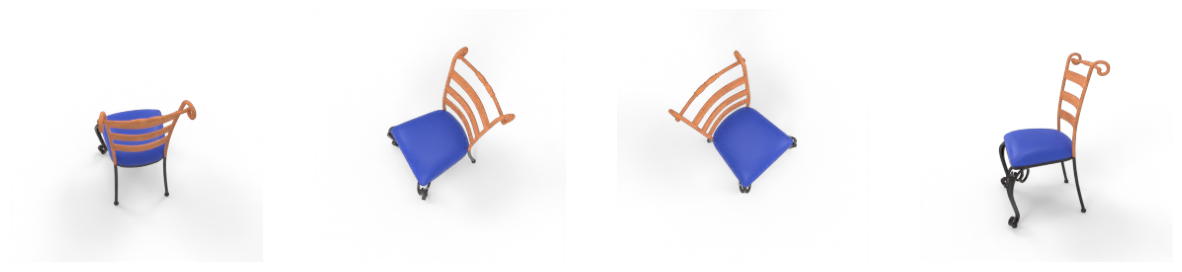

# Displaying the first 8 images (4 canonical views, 4 random views)

show_tensors(my_tensors, size=25)
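The counter-and-break loop used to collect the first few images can also be written with `itertools.islice`, which stops after a fixed number of batches. The sketch below is only illustrative: `fake_loader` is a hypothetical stand-in for `shape_loader` so that the snippet runs without the dataset, and `take_images` is a helper name introduced here, not part of `compat2D`.

```python
from itertools import islice


def fake_loader():
    """Hypothetical stand-in for shape_loader: yields (image, target) pairs forever."""
    k = 0
    while True:
        yield f"image_{k}", k % 3
        k += 1


def take_images(loader, n):
    """Collect the images of the first n (image, target) batches of an iterable loader."""
    return [image for image, _ in islice(loader, n)]


first_images = take_images(fake_loader(), 8)
print(len(first_images))  # 8
```

With the real data, `take_images(shape_loader, 8)` should yield the same list as the loop, assuming the loader produces `(image, target)` pairs as shown in this section.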

We can also choose to select only canonical views or random views, using the view_type parameter.

A view_type parameter equal to 1 will only fetch random views:

# Making a filtered 2D dataloader
shape_loader_random = (
    ShapeLoader(root_url=ROOT_URL,
                split="valid",
                semantic_level="coarse",
                n_compositions=1,
                view_type=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the dataloader and storing the first 4 random views
my_tensors = []
for k, (image, target) in enumerate(shape_loader_random, start=1):
    my_tensors += [image]
    if k == 4:
        break

# Displaying the first 4 random views
show_tensors(my_tensors, size=15)

Part segmentation#

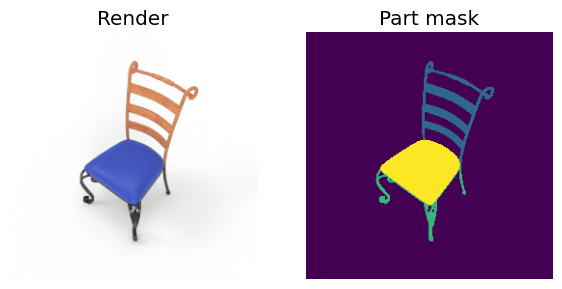

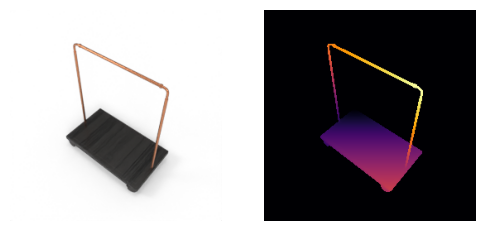

For the 2D part segmentation task, we want shape labels and a segmentation mask, alongside the mask code data.

The SegmentationLoader has the same parameters as the ShapeLoader, with the addition of custom functions to be applied to the segmentation mask or mask code.

We display a rendering and its segmentation mask, alongside the mask metadata, for a single view:

"""

Part segmentation and shape classification data loader.
Iterating over 2D renderings of shapes with a shape category label,
and a part segmentation mask.

Args:
----
    ...:             See CompatLoader2D
    mask_transform:  Transform to apply on segmentation masks

"""

# Making a basic 2D dataloader
part_seg_loader = (
    SegmentationLoader(root_url=ROOT_URL,
                       split="valid",
                       semantic_level="coarse",
                       n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the loader and displaying the data
for image, target, part_mask in part_seg_loader:
    print(">> Unique part mask values:", torch.unique(part_mask))

    part_mask = mask_to_colors(part_mask)
    show_tensors([image, part_mask], size=7, labels=["Render", "Part mask"])
    break

>> Unique part mask values: tensor([ 0, 3, 4, 33])

Warning

For both part and material masks, note that 1 is added to the original index of each category to make room for the background class (identified by 0).

To match 2D and 3D label predictions, you can thus simply filter out background pixels (0-valued) and subtract 1 from 2D predictions.
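The filtering and shifting described in this warning can be sketched as follows. This is a minimal illustration on a small hand-written NumPy array, not code from `compat2D`; `mask_to_3d_labels` is a hypothetical helper name, and a mask coming from the loaders is assumed to be a CPU tensor that can be converted with `.numpy()` first.

```python
import numpy as np


def mask_to_3d_labels(mask):
    """Drop background pixels (value 0) and shift the rest back to 0-based indices.

    Returns the shifted labels of the foreground pixels (row-major order)
    and the boolean foreground map, so predictions can be aligned later.
    """
    mask = np.asarray(mask)
    foreground = mask > 0
    return mask[foreground] - 1, foreground


# Toy 2x3 mask using the part values printed above (0 is the background)
part_mask = np.array([[0, 3, 3],
                      [4, 0, 33]])
labels, foreground = mask_to_3d_labels(part_mask)
print(labels.tolist())        # [2, 2, 3, 32]
print(int(foreground.sum()))  # 4
```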

Grounded Compositional Recognition#

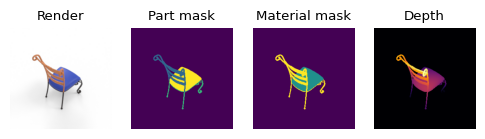

The GCRLoader provides part-material tuples in addition to all of the data provided by the part segmentation loader.

We highlight its usage in the code snippet below:

"""

Dataloader for the full 2D compositional task.
Iterating over 2D renderings of shapes with a shape category label,
a part segmentation mask and a material segmentation mask.

Args:
----
    ...:                 See CompatLoader2D
    mask_transform:      Transform to apply to segmentation masks
    part_mat_transform:  Transform to apply to part/material masks

"""

gcr_loader = (
    GCRLoader(root_url=ROOT_URL,
              split="valid",
              semantic_level="coarse",
              n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the loader and displaying the data
for image, target, part_mask, mat_mask in gcr_loader:
    # print unique part_mask values
    print(">> Unique part mask values:", torch.unique(part_mask))
    print(">> Unique mat mask values:", torch.unique(mat_mask))

    part_mask = mask_to_colors(part_mask)
    mat_mask = mask_to_colors(mat_mask)
    show_tensors([image, part_mask, mat_mask], size=10, labels=["Render", "Part mask", "Material mask"])
    break

>> Unique part mask values: tensor([ 0, 3, 4, 33])

>> Unique mat mask values: tensor([ 0, 9, 13])
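One way to recover part-material pairs from the two masks is to look at which (part, material) values co-occur on foreground pixels. The sketch below is an assumption about how one might do this, not the method used by `GCRLoader`: `part_material_pairs` is a hypothetical helper, the masks are toy NumPy arrays, and the +1 background offset is undone as described in the warning above.

```python
import numpy as np


def part_material_pairs(part_mask, mat_mask):
    """Return the sorted unique (part, material) index pairs seen on foreground pixels."""
    part = np.asarray(part_mask).ravel()
    mat = np.asarray(mat_mask).ravel()
    foreground = (part > 0) & (mat > 0)
    # Undo the +1 background offset so indices match the original categories
    pairs = np.stack([part[foreground] - 1, mat[foreground] - 1], axis=1)
    return [tuple(p) for p in np.unique(pairs, axis=0).tolist()]


# Toy masks built from the values printed above (0 is the background)
part_mask = np.array([[0, 3, 3],
                      [4, 4, 33]])
mat_mask = np.array([[0, 9, 9],
                     [13, 13, 9]])
print(part_material_pairs(part_mask, mat_mask))  # [(2, 8), (3, 12), (32, 8)]
```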

Full loader#

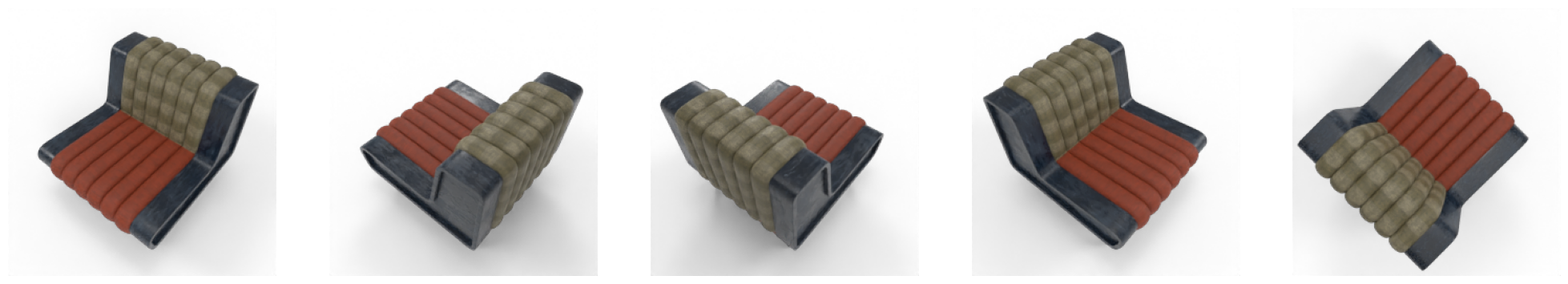

We also provide a complete dataloader that fetches all data available in the shards. In addition to the fields returned by the previous loaders, it provides:

camera parameters

depth maps

shape ids

view ids

view types

Feel free to adapt it to your needs by checking the source code. We highlight its usage in the code snippet below:

"""

Dataloader for the full data available in the WDS shards.
Adapt and filter to the fields needed for your usage.

Args:
    ...:             See CompatLoader2D
    mask_transform:  Transform to apply to segmentation masks

"""

full_loader = (
    FullLoader(root_url=ROOT_URL,
               split="valid",
               semantic_level="coarse",
               n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the loader and displaying the data
for shape_id, image, target, part_mask, mat_mask, depth,\
        style_id, view_id, view_type, cam_parameters in full_loader:
    if view_id == 0:
        print(">> Shape id [%s], target class [%d], style id [%d], view_id [%d], view_type [%d]" %
              (shape_id[0], target, style_id, view_id, view_type))
        print(">> Camera parameters shape:", cam_parameters.shape)
        K, M = cam_parameters[0].chunk(2, axis=0)

    part_mask = mask_to_colors(part_mask)
    mat_mask = mask_to_colors(mat_mask)
    depth_map = depth_to_colors(depth)
    show_tensors([image, part_mask, mat_mask, depth_map], size=6,
                 labels=["Render", "Part mask", "Material mask", "Depth"],
                 font_size=8)
    if view_id == 5:
        break

>> Shape id [0c_1d3], target class [12], style id [0], view_id [0], view_type [0]

>> Camera parameters shape: torch.Size([1, 8, 4])
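Since the full loader yields ten fields per batch, giving them names can make it easier to adapt the loop to your needs. The following is a minimal sketch, assuming the field order of the loop above; `FullSample` and `named_samples` are hypothetical helpers introduced for illustration and are not part of `compat2D`. A hand-written placeholder batch stands in for the real loader.

```python
from typing import Any, NamedTuple


class FullSample(NamedTuple):
    """Hypothetical container; field order follows the full loader loop above."""
    shape_id: Any
    image: Any
    target: Any
    part_mask: Any
    mat_mask: Any
    depth: Any
    style_id: Any
    view_id: Any
    view_type: Any
    cam_parameters: Any


def named_samples(loader):
    """Wrap each 10-field batch yielded by a loader in a FullSample."""
    for batch in loader:
        yield FullSample(*batch)


# Placeholder batch so the sketch runs without the dataset
fake_batches = [("0c_1d3", "img", 12, "parts", "mats", "depth", 0, 0, 0, "cam")]
sample = next(named_samples(fake_batches))
print(sample.shape_id, sample.target, sample.view_type)  # 0c_1d3 12 0
```

With the real data, `named_samples(full_loader)` would let you write `sample.depth` or `sample.cam_parameters` instead of unpacking all ten values.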

From the Web#

By using WebDataset as a backbone for our data storage, we enable the use of our data in any PyTorch learning pipeline without having to pre-download the whole dataset.

Set root_url to the root of our 2D WebDataset shards on our AWS S3 bucket and you can start training directly on streamed samples, without a separate download step.

# Defining the root AWS URL
aws_url = "https://3dcompat-dataset.s3-us-west-1.amazonaws.com/v2/2D/"

# Making a basic 2D dataloader
shape_loader = (
    ShapeLoader(root_url=aws_url,
                split="train",
                semantic_level="coarse",
                n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the dataloader and storing the first 5 images
my_tensors = []
for k, (image, target) in enumerate(shape_loader):
    my_tensors += [image]
    if k == 4:
        break

# Displaying the first 5 images in the train set
show_tensors(my_tensors, size=25)

Hint

If your use case is not compatible with dataloaders, note that our loaders are only simple wrappers around the WebDataset API.

For a more tailored usage, please check compat2D.py and the official WebDataset documentation.

Evaluation dataloader#

Hint

The section below is no longer relevant: our CVPR 2023 challenge is over and we have released the test set labels.

You can access the test data loader just as any other split, using the split="test" parameter.

For more information, please take a look at the documentation on the 2D dataloaders.

The test WebDataset shards that we provide are stripped of all ground truth data. They only contain:

a) the rendered view

b) the associated depth map

c) the view identifiers (view_id, shape_id, style_id)

d) the camera view parameters

To iterate over the test data, you need a special dataloader, EvalLoader, which only fetches these fields from the data shards.

We illustrate its usage below:

# Making a basic 2D dataloader
eval_loader = (
    EvalLoader(root_url=aws_url,
               semantic_level="coarse",
               n_compositions=1)
    .make_loader(batch_size=1, num_workers=0)
)

# Iterating over the dataloader and displaying the first view with its depth map
for k, (shape_id, image, depth, style_id, view_id, view_type, cam_parameters) in enumerate(eval_loader):
    depth_map = depth_to_colors(depth)
    print(">> Shape id [%s], style id [%d], view_id [%d], view_type [%d]" % (shape_id[0], style_id, view_id, view_type))
    show_tensors([image, depth_map], size=6)
    break

>> Shape id [28_071], style id [0], view_id [0], view_type [0]

Shape and style identifiers are provided in order to match streamed 2D images with the 3D RGB pointclouds.
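Matching on these identifiers can be as simple as a dictionary lookup keyed by `(shape_id, style_id)`. The sketch below is illustrative only: the 3D records, their `points` field and the `build_index` helper are hypothetical, and how the 3D point clouds are actually stored is not covered here.

```python
def build_index(pointcloud_records):
    """Map each (shape_id, style_id) pair to its 3D record."""
    return {(r["shape_id"], r["style_id"]): r for r in pointcloud_records}


# Hypothetical 3D records; in practice these would come from the 3D data
records_3d = [
    {"shape_id": "28_071", "style_id": 0, "points": "pc_a"},
    {"shape_id": "28_071", "style_id": 1, "points": "pc_b"},
]
index = build_index(records_3d)

# Identifiers as yielded for one 2D view with batch_size=1:
# shape_id is a sequence of strings, style_id is convertible to int
shape_id, style_id = ["28_071"], 0
match = index[(shape_id[0], int(style_id))]
print(match["points"])  # pc_a
```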